Police use of facial recognition technology is being challenged in court for the first time in the UK.

Human and civil rights campaigners have backed the action after several police forces in England and Wales trialled the technology.

Ed Bridges, from Cardiff, will be represented by civil rights group Liberty at the High Court as he challenges use of the technology by South Wales Police.

We're taking the police to court on Tuesday to end the unlawful use of #FacialRecognition tech in public. It is inaccurate. It is discriminatory. It violates everyone's rights. It has no place on our streets. pic.twitter.com/h4eyzIpnHp

— Liberty (@libertyhq) May 19, 2019

Earlier this month, officials in San Francisco voted to ban facial recognition systems as some campaigners branded them “Big Brother technology”.

Here’s how facial recognition works – and why it is divisive.

– How does it work?

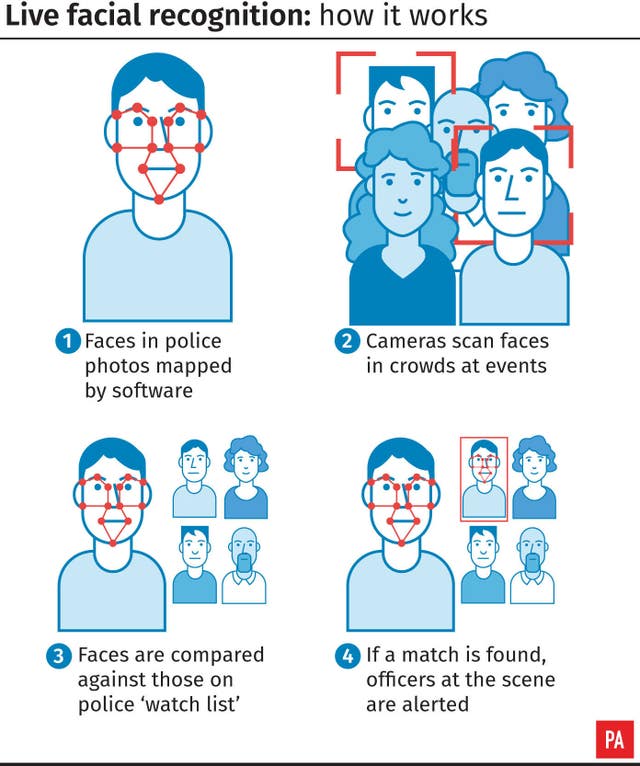

Technology trialled by the Metropolitan Police in London uses special cameras to scan the structure of faces in a crowd of people.

The system – called NeoFace and created by NEC – then creates a digital image and compares the result against a “watch list” made up of pictures of people who have been taken into police custody.

Not everybody on a police watch list is wanted – the lists can include missing people and other persons of interest.

If a match is found, officers at the scene where cameras are set up are alerted.
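The matching step described above can be illustrated with a short sketch. It is not how NeoFace works internally, which has not been described here; it assumes each face has already been reduced to a list of numbers, and it uses cosine similarity with a made-up threshold of 0.9 to decide whether a scanned face is close enough to a watch-list entry. The function names, the watch-list entries and the threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two face templates point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_match(face, watch_list, threshold=0.9):
    """Return the best watch-list entry scoring at or above the threshold, or None."""
    best_name, best_score = None, threshold
    for name, template in watch_list.items():
        score = cosine_similarity(face, template)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical watch list: names mapped to illustrative face templates.
watch_list = {
    "person_a": [1.0, 0.0, 0.0],
    "person_b": [0.0, 1.0, 0.0],
}

scanned_face = [0.99, 0.05, 0.0]  # Assumed output of a camera scan.
match = find_match(scanned_face, watch_list)
if match is not None:
    print(f"Alert officers: possible match with {match}")
```

Where the threshold is set matters in a sketch like this: a lower value flags more people, including more who are not on the list, while a higher value misses more of those who are.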

– How long has it been used?

The Met has used the technology several times since 2016, according to its website.

It was deployed at Notting Hill Carnival in 2016 and 2017, on Remembrance Day in 2017, at the Port of Hull docks to assist Humberside Police last year, and at Stratford transport hub for two days in June and July.

South Wales Police first piloted its technology during the 2017 Champions League final week in Cardiff, making it the first UK force to use it at a large sporting event.

Liberty claims South Wales Police has used the technology about 50 times.

– Why is it controversial?

Campaigners say facial recognition breaches civil rights.

Liberty said scanning and storing biometric data “as we go about our lives is a gross violation of privacy”.

Big Brother Watch, a privacy and civil liberty group, said “the notion of live facial recognition turning citizens into walking ID cards is chilling”.

Some claim the technology will deter people from expressing views in public or going to peaceful protests.

It is also claimed facial recognition is least accurate when it attempts to identify black people and women.

The ban in San Francisco was part of broader legislation that requires city departments to establish usage policies and obtain board approval for surveillance technologies.

City supervisor Aaron Peskin, who championed the legislation, said: “This is really about saying, ‘We can have security without being a security state. We can have good policing without being a police state’.”

– What do the police say?

The Met says its trials aim to discover whether the technology is an effective way to “deter and prevent crime and bring to justice wanted criminals”.

“We’re concerned that what we do conforms to the law, but also takes into account ethical concerns and respects human rights,” the force said.

South Wales Police Assistant Chief Constable Richard Lewis has said: “We are very cognisant of concerns about privacy and we are building in checks and balances into our methodology to reassure the public that the approach we take is justified and proportionate.”
